The ongoing debate over online misinformation and social media has highlighted a significant divide between public discourse and scientific research. Public figures and journalists frequently assert that false online content has a widespread impact, often contradicting current empirical evidence. In a new study published in Nature, researchers have identified three main misconceptions: the overestimation of average exposure to problematic content, the perceived dominance of algorithms in this exposure, and the belief that social media is the primary driver of broader social issues such as polarization.

Behavioral science research shows that exposure to false and inflammatory content is generally low, concentrated among a small, highly motivated group. Given this, experts recommend holding platforms accountable for enabling access to extreme content, particularly where consumption is highest and the risk of real-world harm is significant. They also call for greater transparency from these platforms and collaboration with external researchers to better understand and mitigate the effects of online misinformation.

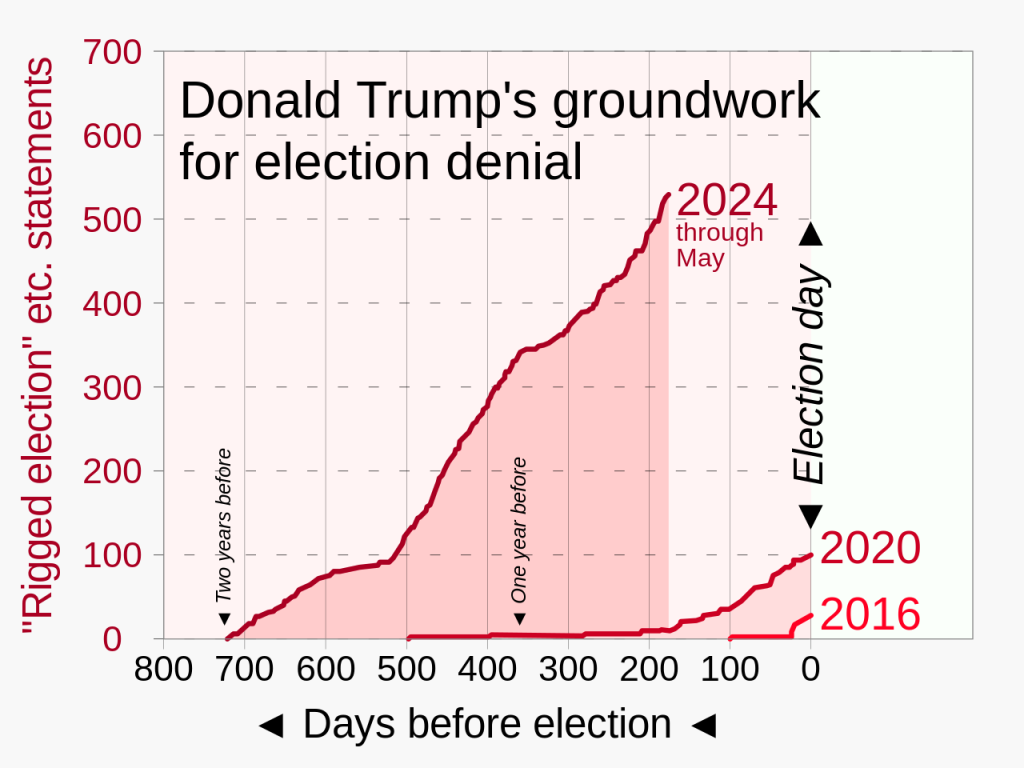

This issue is particularly urgent in regions outside the USA and Western Europe, where data is limited and the potential harms could be more severe. Facts like the reality of the Holocaust, the life-saving impact of COVID-19 vaccines, and the legitimacy of the 2020 US presidential election are indisputable but still hotly contested in some online communities. The spread of false information has led to increased vaccine hesitancy and heightened political polarization.

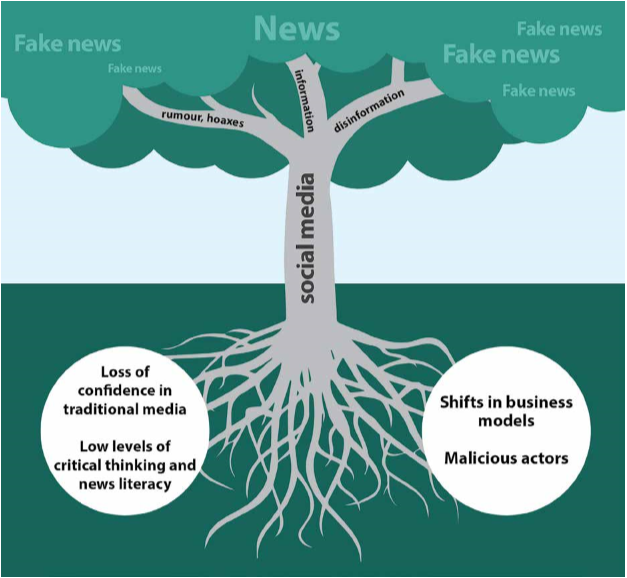

Misinformation poses a significant threat to democracy. Research by Ceren Budak from the University of Michigan reveals that public perceptions often overestimate both the extent of exposure to misinformation and the influence of algorithms. This narrow focus on social media overlooks wider societal and technological trends that contribute to misinformation. Effective intervention requires platforms and regulators to act on evidence about how misinformation spreads and why, particularly in diverse global contexts.

Historically, fake news was spread by print publications. Today, the role of social media platforms in spreading misinformation is evident in a study by David Lazer and colleagues. Analyzing Twitter activity during the 2020 US presidential election, they found that only a small fraction of users shared misinformation. A significant drop in misinformation sharing followed Twitter’s ban of 70,000 users after the January 6 Capitol attack, suggesting that enforcing terms of use can effectively counter misinformation.

False claims such as the assertion that the Holocaust never happened have somehow gained traction despite overwhelming evidence that it is an irrefutable historical fact, and Facebook has taken countermeasures on that front. However, such interventions are becoming increasingly challenging. Since Elon Musk acquired Twitter, now rebranded as X, the platform has reduced content moderation and limited researchers’ access to data. This lack of transparency extends to the funding mechanisms of misinformation. The ad-funded model of the web has inadvertently boosted misinformation production, with companies often unaware that their ads appear on misinformation sites due to automated advertising exchanges.

Research by Wajeeha Ahmad at Stanford University highlights this issue, showing that companies that advertise through these exchanges are more likely to have their ads appear on misinformation sites than companies that do not. This opacity hampers efforts to understand and counter misinformation. Increased engagement between companies and researchers is essential for ethical data collaboration and effective intervention without infringing on free speech.

The rise of generative AI applications, which lower the barriers to creating dubious content, further underscores the need for urgent action. Studies indicate that while AI-generated misinformation is not yet prevalent, it is likely only a matter of time. The global community shares a common interest in curbing misinformation to maintain evidence-based public debate. Effective measures must be tested, and independent researchers need access to data to inform these efforts.

With major elections involving around four billion people this year, the integrity of democratic processes is at risk from misinformation. Effective democracy relies on informed citizens and evidence-based discourse. False information can increase polarization and undermine trust in electoral processes, making it imperative to counter misinformation proactively.

Interventions such as psychological inoculation, accuracy prompts, and educational campaigns can help. These measures, combined with platform accountability and regulatory action, can mitigate the spread and impact of misinformation. Protecting democracy requires a concerted effort to ensure public discourse is grounded in evidence and facts, empowering citizens to make informed decisions.